If 2025 felt like the year AI went from “interesting” to “everywhere,” you’re not imagining it. In McKinsey’s latest global survey, 88% of respondents say their organizations are using AI in at least one business function. That’s basically everyone.

And yet, the part that matters most to you as a leader is what comes next.

Because the same survey says only about 1/3 have started scaling AI across the enterprise. So we have a world where AI is common, but durable business impact is still uneven.

Here’s how I’d explain 2025 in one line: AI usage got easy. Getting value got hard.

Below are the 5 themes I think will separate the winners from the “we tried a bunch of tools” crowd.

1) AI agents moved from buzzword to real work

Most execs spent 2023–2024 getting their teams comfortable with chat. Draft an email. Summarize a doc. Answer a question.

2025 is when the conversation shifted to something more operational: agents.

Think about an agent as “AI that can take a task, plan steps, and execute across systems.” Not just words. Actions. That can mean triaging IT tickets, pulling data from a CRM, creating a first draft of a customer proposal, or kicking off a workflow in your finance stack.

McKinsey found 62% of organizations are experimenting with AI agents, and 23% say they’re scaling agentic AI somewhere in the enterprise. But scaling is still narrow: in any single function, no more than 10% report they’re scaling agents.

The leadership takeaway is simple: agents are real, but they’re early. That’s good news if you act now.

One practical warning, though: agents don’t fix broken processes. They speed them up. If your approvals are a mess, your data is sloppy, or your handoffs are political, an agent will happily run that chaos 10x faster. That’s not automation. That’s a faster failure.

So if you’re going to pilot agents, pick workflows you can actually control end-to-end. Start there. Earn trust. Then expand.

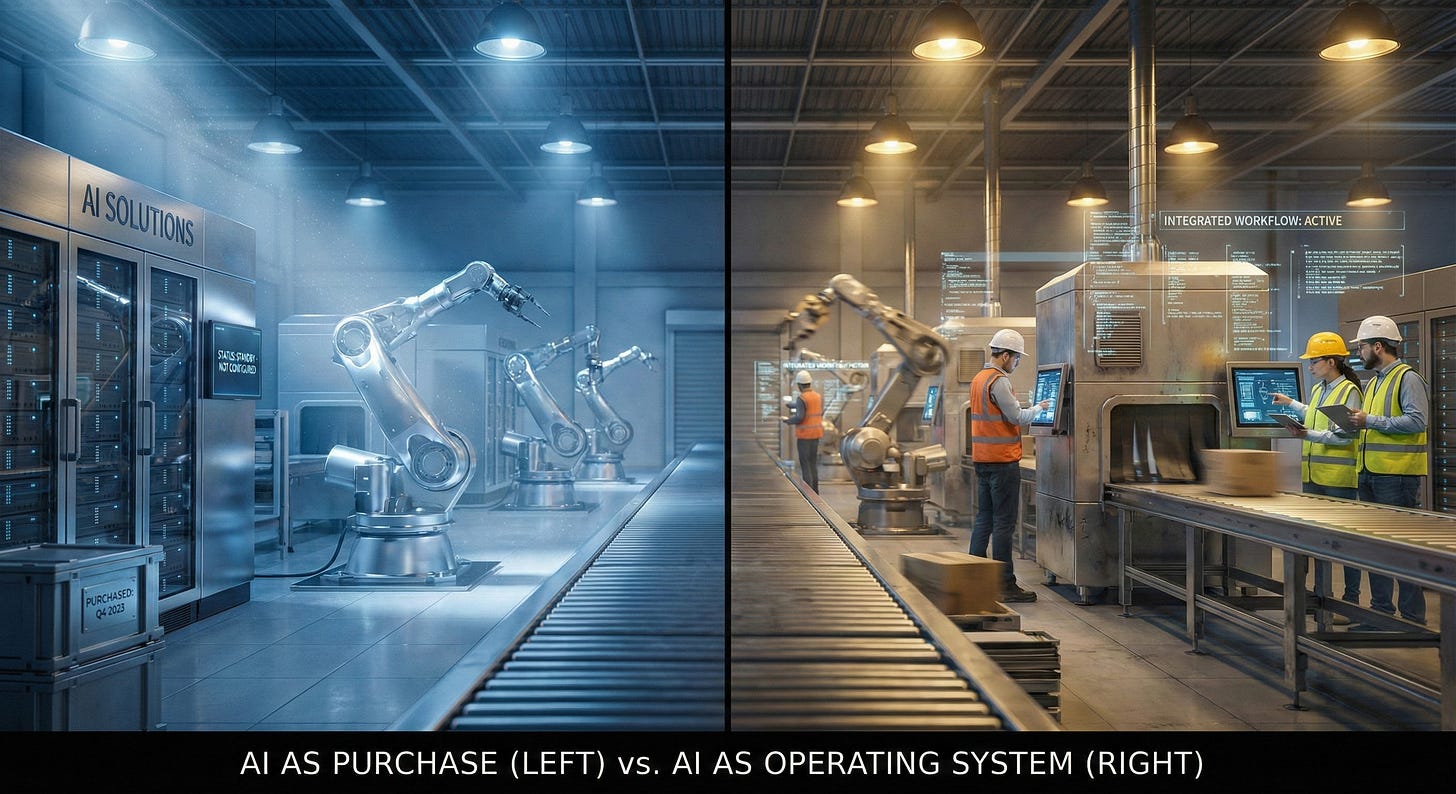

2) Workflow redesign is the line between “AI adoption” and “AI advantage”

This is the one that most teams miss, because it’s less fun than model comparisons.

The companies seeing real impact aren’t “adding AI” to the old way of working. They’re changing the work.

McKinsey’s wording is telling: high performers are nearly 3x as likely as other organizations to say they have fundamentally redesigned workflows. And McKinsey’s analysis suggests workflow redesign is one of the strongest contributors to meaningful business impact.

Here’s what that looks like in plain English.

Old approach:

You keep the same process, same meetings, same approvals. You insert AI as a helper. People do the same work, slightly faster.

New approach:

You rebuild the process assuming AI is present. You remove steps. You change roles. You move decision rights. You tighten feedback loops. You define what humans must review and what can run straight through.

This is why so many “AI rollouts” disappoint. Leaders buy tools, but they don’t change the operating system of the company. And the operating system is where the cost and speed live.

If you want one uncomfortable truth from 2025, it’s this: AI value is less about prompts and more about process design.

3) Scaling is still the bottleneck (and that’s where leadership matters)

The story of 2025 isn’t “who tried AI.” Almost everyone did.

The story is “who scaled it.”

McKinsey is blunt: while AI use rose to 88%, “nearly two-thirds” say they have not yet begun scaling AI across the enterprise, and “approximately one-third” say they have begun to scale.

That gap exists for boring reasons that are very solvable, if you treat them like real work:

Data access and permissions are messy.

Security and legal reviews are slow or unclear.

No one owns the end-to-end workflow.

Teams don’t have shared evaluation standards, so every pilot restarts from zero.

People are scared of being blamed for mistakes, so they keep humans in every step. That kills throughput.

There’s also a cost story here that changed fast.

Stanford’s AI Index shows the cost of inference at GPT-3.5-level performance fell more than 280-fold between November 2022 and October 2024. The economics of “putting AI in production” got dramatically better, quickly.
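To make that drop concrete, here is a rough back-of-the-envelope sketch. The 280-fold figure is the one cited above; the $10,000 monthly bill is a made-up number for illustration, not something from the AI Index.

```python
# Hypothetical: a workflow that would have cost $10,000 a month in inference
# at late-2022 prices. The starting bill is an assumption, not a real figure.
COST_REDUCTION_FACTOR = 280          # the "280-fold" drop cited above, used as a floor
monthly_cost_then = 10_000.00        # assumed late-2022 monthly inference bill (USD)

# Same volume of work at late-2024 prices, all else equal.
monthly_cost_now = monthly_cost_then / COST_REDUCTION_FACTOR
monthly_savings = monthly_cost_then - monthly_cost_now

print(f"Then: ${monthly_cost_then:,.2f} per month")
print(f"Now:  ${monthly_cost_now:,.2f} per month")
print(f"Difference: ${monthly_savings:,.2f} per month")
```

The point is the order of magnitude, not the exact numbers: a bill that once needed budget approval can shrink to a rounding error.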

So if scaling is still slow, it’s usually not because the model is too expensive. It’s because the company isn’t set up to ship.

This is a leadership problem, not a tooling problem.

4) ROI became non-negotiable (because spending kept going anyway)

Most boards are past the “should we do AI?” phase.

Now it’s: “What are we getting for this money, and how do we know?”

McKinsey reports 64% of respondents say AI is enabling innovation. That’s encouraging. But only 39% report any EBIT impact at the enterprise level, and most of those say AI accounts for less than 5% of EBIT.

That right there is the tension of 2025: leaders feel momentum, but hard financial impact is still emerging.

And yet, spending continues.

A recent CEO survey covered by The Wall Street Journal found 68% of CEOs plan to increase AI investment in 2026, even though fewer than half of AI initiatives have produced returns exceeding their costs.

So what should you do with that?

Stop treating ROI like a one-time business case. Treat it like a product dashboard.

In practice, I like to see 3 layers of measurement:

Workflow metrics (fast feedback): cycle time, handle time, backlog size, error rate, rework.

Business metrics (what you tell the CFO): cost-to-serve, conversion rate, churn, win rate, time-to-cash.

Risk metrics (what keeps you out of trouble): hallucination rate in high-stakes use, policy violations, data leakage incidents, fraud attempts detected.

If you can’t measure it, you can’t improve it. And if you can’t improve it, pilots become permanent hobbies.
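If you want to hand this to whoever builds your dashboards, the three layers can be sketched in a few lines. This is a minimal illustration, not a finished tool: the `Metric` class, the `weekly_summary` function, the metric names, and every number below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    name: str
    layer: str                    # "workflow", "business", or "risk"
    baseline: float               # value before the AI-assisted version
    current: float                # value this week
    lower_is_better: bool = True  # e.g. cycle time; set False for win rate

    def improved(self) -> bool:
        """True if this week's value beats the baseline."""
        if self.lower_is_better:
            return self.current < self.baseline
        return self.current > self.baseline

    def pct_change(self) -> float:
        """Percent change from baseline (negative means the value went down)."""
        return (self.current - self.baseline) / self.baseline * 100.0


def weekly_summary(metrics):
    """Count how many metrics improved in each layer."""
    summary = {}
    for m in metrics:
        layer = summary.setdefault(m.layer, {"improved": 0, "total": 0})
        layer["total"] += 1
        layer["improved"] += int(m.improved())
    return summary


# Made-up numbers for one pilot workflow.
metrics = [
    Metric("cycle_time_hours", "workflow", baseline=48.0, current=36.0),
    Metric("rework_rate", "workflow", baseline=0.12, current=0.15),
    Metric("win_rate", "business", baseline=0.20, current=0.25, lower_is_better=False),
    Metric("policy_violations", "risk", baseline=4.0, current=1.0),
]

summary = weekly_summary(metrics)
for layer, counts in summary.items():
    print(f"{layer}: {counts['improved']}/{counts['total']} metrics improved")
```

In this made-up week, cycle time improved but rework got worse. That is exactly the kind of tradeoff a one-time business case hides and a weekly dashboard surfaces.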

5) Risk, security, and regulation moved to the board agenda

2025 was also the year more leaders stopped treating AI risk as theoretical.

McKinsey found 51% of respondents from AI-using organizations report at least one negative consequence, with nearly one-third reporting consequences tied to AI inaccuracy.

That maps to what many of us saw in the field: AI is useful, but it will confidently produce wrong outputs. And in the wrong workflow, “confidently wrong” is expensive.

Then there’s the external environment.

Stanford’s 2025 AI Index reports that in 2024, U.S. federal agencies introduced 59 AI-related regulations, more than double the 2023 count. The same report highlights how much governments are spending on AI, including China’s $47.5B semiconductor fund.

And regulation is not just a slow background hum. It’s active policy conflict. For example, the AP reported that on December 12, 2025, President Trump signed an executive order aimed at blocking state AI regulations, signaling a messy push-pull between state action and federal posture.

If you’re leading a business, here’s the practical implication:

You need a clear stance on where AI is allowed, where it’s restricted, and what requires human review. Not a 40-page policy that no one reads. A working set of rules that ship with the workflow.
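Here is a rough sketch of what “rules that ship with the workflow” could look like in practice. Everything in it is an assumption for illustration: the three rule levels mirror the allowed / human review / restricted split above, and the workflow and action names are invented.

```python
from enum import Enum


class Rule(Enum):
    ALLOWED = "allowed"            # AI can run this straight through
    HUMAN_REVIEW = "human_review"  # AI drafts, a person approves
    RESTRICTED = "restricted"      # AI is not used for this step


# Hypothetical policy table: (workflow, action) -> rule.
POLICY = {
    ("support", "draft_reply"): Rule.ALLOWED,
    ("finance", "approve_refund"): Rule.HUMAN_REVIEW,
    ("legal", "send_contract"): Rule.RESTRICTED,
}


def check(workflow: str, action: str) -> Rule:
    """Look up the rule for a step; anything not listed is treated as restricted."""
    return POLICY.get((workflow, action), Rule.RESTRICTED)


print(check("support", "draft_reply").value)
print(check("finance", "approve_refund").value)
print(check("marketing", "publish_post").value)  # not in the table
```

The design choice worth copying is the default: a step nobody has classified is treated as restricted until someone decides otherwise.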

And because fraud is part of this story now, I also want leaders watching the human layer: training, escalation paths, and verification steps. Mastercard’s 2025 consumer cybersecurity survey captures how quickly anxiety is rising as scams get more convincing. That fear shows up in customers and employees alike.

What I’d do on Monday morning

Pick 2 end-to-end workflows where speed or cost really matters (not “nice to have”).

Assign a single accountable owner for each workflow, with the power to change steps, not just add tools.

Build one agent-assisted version with guardrails, and measure it weekly with workflow + business + risk metrics.

Stand up a lightweight governance loop: model access, data rules, red-team tests, and a clear human-override path.

Train the humans like it matters, because it does: what the tool can do, what it can’t, and what “good judgment” looks like in your business.

AI in 2025 got normal. That’s the headline.

In 2026, the companies that win won’t be the ones that talked about it the most. They’ll be the ones that redesigned the work, measured the value, and shipped safely at scale.

I write these pieces for one reason. Most leaders do not need another summary of McKinsey’s AI survey; they need someone who will sit next to them, look at where work actually moves through their company, and say, “Here is the workflow worth redesigning, here is where an agent might actually help, and here is the measurement system that will tell you if it’s working or wasting money.”

If you want help sorting that out for your company, reply to this or email me at steve@intelligencebyintent.com. Tell me what you sell, where scaling has stalled, and which workflows matter most to your cost structure or speed. I will tell you what I would redesign first, how I would measure it, and whether it even makes sense for us to do anything beyond that first experiment.

PS: It’s the start of the holiday season, so I think everyone needs a few new photos of Magnus.

Steve Smith

Legal AI consultant with 25+ years executive leadership. Wharton MBA. Helping law firms adopt AI with CLE-eligible workshops and implementation sprints.

This article was originally published on Intelligence by Intent on Substack. Subscribe for weekly insights on AI adoption in legal practice.